Popular Tech Company Cut Processing Time and Data Processing Costs by 50% with Delta Lake

Customer Details:

Client Name: Under NDA

Industry: Technology Company

Development Location: Arizona, USA

Solution: Incremental Processing

Used Services: Delta Lake, Databricks

The renowned technology company adopted Delta Lake to overhaul its data processes, cutting processing time and cost while keeping its datasets flexible and available during updates.

Overview:

The web hosting industry giant, catering to a vast clientele of 21 million customers worldwide, confronted a significant challenge in efficiently processing its ever-expanding data. With an extensive array of services, including domain names, website hosting, email marketing, and professional solutions, the company accumulated a massive amount of data.

To tackle the data processing bottleneck and optimize data management, the web hosting company joined forces with VirtueTech. This strategic collaboration aimed to shorten data processing times while simplifying data storage and maintenance. By leveraging VirtueTech’s expertise, the company sought to enhance its data infrastructure and continue providing seamless services to its millions of customers globally.

The Challenges of the Web Hosting Company:

Here are the challenges that the web hosting company was facing:

| Parameters | Challenges |
| --- | --- |
| Scale of Operations | As the world’s largest domain registrar with 21 million users, the company faces significant challenges in handling vast amounts of financial transactions and data on a daily basis. |
| Time-Consuming Data Updates | Incorporating new data into the dataset and updating it daily results in extensive processing times, causing complete downtime for end-users during the update process. |
| Risk of Data Problems | Processing the complete dataset daily poses a high risk of data loss, incomplete updates, failures, delays, and glitches, impairing the ability of business analysts and end-users to identify and leverage critical business opportunities. |
| Impact on Efficiency and Productivity | The extended downtime during data updates hinders the end-users’ productivity and efficiency, leading to potential business losses and user dissatisfaction. |
| Need for a Scalable Solution | The domain hosting organization must adopt a scalable solution that allows seamless integration of new data without affecting the availability of existing data, ensuring continuous operations and timely insights for business analysts and end-users alike. |

As the world’s largest domain registrar with 21 million users, the web hosting company was struggling to process its daily data. As a domain hosting organization, it recorded financial transactions and other data every day. That data was gathered and processed into a single dataset, which business analysts used to check project status, resolve issues, and track progress.

The problem was that every time new data arrived, the complete dataset had to be updated, which took a long time to process. Moreover, while the dataset was being updated, the existing data was unavailable to end users. They faced complete downtime during the update process, which resulted in a loss of efficiency and productivity.

Additionally, processing the complete dataset daily carried a high risk of data problems such as loss, incomplete updates, failures, delays, and glitches. This risk impaired the ability of the company’s end users and business analysts to promptly identify business opportunities and other business developments.

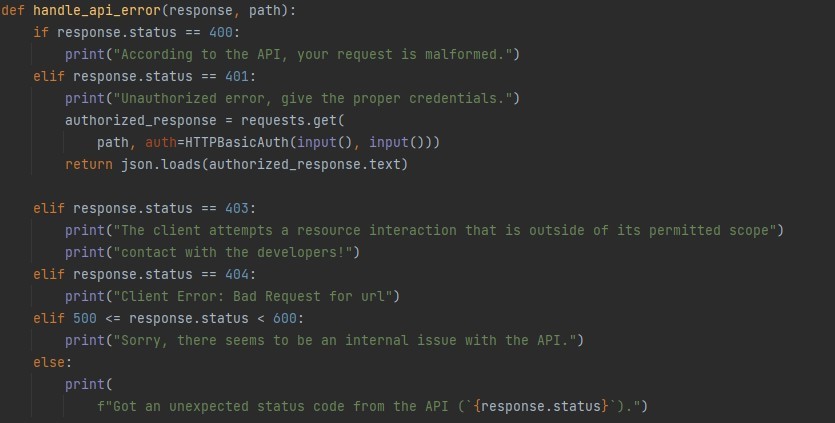

The Agile Solution for Web Hosting Company

Here’s how VirtueTech Inc. addressed the web hosting company’s challenges:

| Parameters | Solution |
| --- | --- |
| Delta Lake | VirtueTech Inc. introduces Delta Lake to the web hosting company, enabling flexibility and automation within the dataset, leading to enhanced efficiency without downtime. |
| Flexible and Available Dataset | Delta Lake ensures that existing data remains accessible while updating new data, allowing end-users to utilize the dataset without interruption. |
| Time and Resource Savings | Unlike traditional methods, incremental processing focuses only on new or changed data, reducing processing time by up to 50% and optimizing resource utilization. |
| Mitigating Data Risks | By avoiding daily processing of the entire dataset, incremental processing minimizes the chances of data glitches and loss, resulting in cost savings and improved data integrity. |
| Limitless Storage and Data Analysis | Incremental processing eliminates duplicate data preservation, unlocking storage and maintenance opportunities. It also captures and records daily data changes, enabling detailed analysis of past data and identifying specific changes over time. |

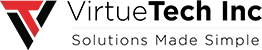

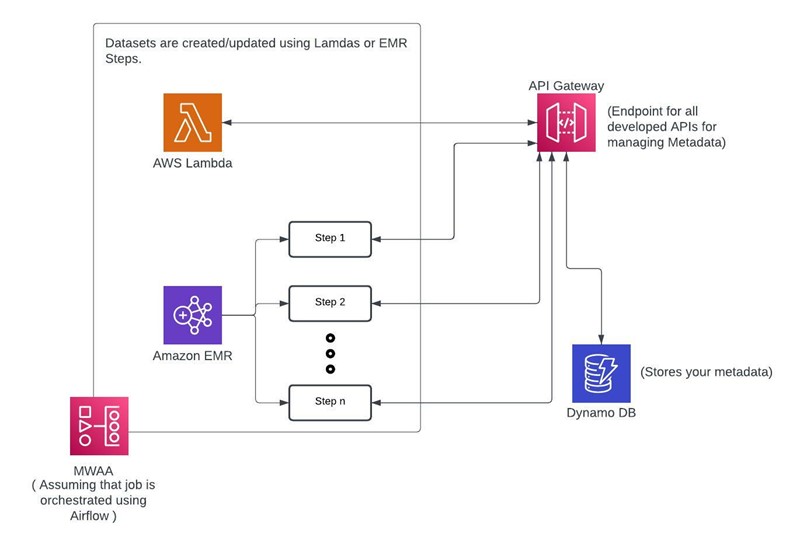

VirtueTech Inc. introduced the web hosting company to the properties of Delta Lake that bring flexibility, availability, and automation to the dataset, allowing end users to work more efficiently without any downtime.

Moreover, Delta Lake makes life easier for business analysts because it updates the dataset without disturbing the existing data. Delta Lake processes and writes only the new data that arrives each day; the rest of the data stays as it is and remains available to end users even during the update.
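The idea of keeping existing data readable while a daily batch is applied can be illustrated with a small sketch. This is plain Python, not the Delta Lake API, and the `SnapshotTable` class and its method names are hypothetical, chosen only for illustration. It assumes the simplest possible model: the new batch is staged off to the side and then published in a single step, so a reader never sees a half-updated table.

```python
from types import MappingProxyType


class SnapshotTable:
    """Toy table (hypothetical): readers always see the last committed snapshot."""

    def __init__(self, rows):
        # Wrap the committed rows in a read-only view.
        self._committed = MappingProxyType(dict(rows))

    def read(self):
        # Readers receive the committed snapshot; it is never modified in place.
        return self._committed

    def apply_daily_batch(self, new_rows):
        # Stage the next snapshot in a separate dict; current readers are unaffected.
        staged = dict(self._committed)
        staged.update(new_rows)
        # Publishing is one reference swap, so there is no partially updated state.
        self._committed = MappingProxyType(staged)


table = SnapshotTable({"txn-1": 120.0, "txn-2": 45.5})
analyst_view = table.read()               # an analyst starts reading
table.apply_daily_batch({"txn-3": 80.0})  # the daily update runs meanwhile

print(dict(analyst_view))  # the analyst's view is unchanged and complete
print(dict(table.read()))  # new readers see the updated table
```

The sketch copies the whole dictionary for simplicity; a real storage layer would avoid that copy, but the reader-facing behavior it demonstrates is the same: old data stays available until the new version is published.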

Traditionally, data processing involves processing the entire dataset, which can take hours and require a lot of resources. However, with incremental processing, only the new or changed data is processed, which can be done in a few minutes. This can save up to 50% of the processing time and make the data available for use much faster.
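The difference between the two approaches can be shown with a minimal sketch. It is plain Python with made-up sample data and hypothetical function names (`full_refresh`, `incremental_merge`); it does not use the Delta Lake API and is not the client's actual pipeline. Delta Lake offers a merge (upsert) operation for this kind of update; the sketch only illustrates why the amount of work scales with the size of the daily batch rather than the size of the whole dataset.

```python
def full_refresh(source_rows):
    """Rebuild the whole table; work is proportional to the full dataset."""
    table = {row["id"]: row for row in source_rows}
    return table, len(source_rows)


def incremental_merge(table, changed_rows):
    """Upsert only new or changed rows; work is proportional to the batch."""
    for row in changed_rows:
        table[row["id"]] = row  # insert a new id or overwrite an existing one
    return table, len(changed_rows)


# Hypothetical data: 1,000 historical transactions and a 2-row daily batch
# (one changed row, one new row).
history = [{"id": i, "amount": 10 * i} for i in range(1, 1001)]
todays_batch = [{"id": 1000, "amount": 99}, {"id": 1001, "amount": 5}]

table, rows_touched_full = full_refresh(history)
table, rows_touched_incr = incremental_merge(table, todays_batch)

print(rows_touched_full, rows_touched_incr)  # 1000 2
```

The row counts here are illustrative only; the actual savings depend on how much of the dataset changes each day.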

Moreover, because the entire dataset no longer needs to be processed daily, incremental processing reduces the risk of data glitches and loss. Together, these advantages reduced the overall data processing cost by 50%, along with the expenses incurred from data failures and glitches.

Additionally, incremental processing brought a few more advantages to the web hosting company. By no longer preserving duplicate copies of data, the process freed up storage space and reduced maintenance effort.

Moreover, it captures a snapshot of each day’s changes to the dataset, which helps the web hosting company analyze past data. This record of transactions lets the organization go back in time to see how the data looked a few days ago, and to identify exactly which changes arrived and when.
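Delta Lake exposes this capability as "time travel", where a table can be read as of an earlier version. The sketch below illustrates the underlying idea in plain Python rather than through the Delta Lake API. The `ChangeLogTable` class, its methods, and the sample orders are all hypothetical. It assumes each daily commit stores only the rows that changed, and that an earlier state is rebuilt by replaying the log up to the requested version; deletes are not modeled.

```python
class ChangeLogTable:
    """Toy table (hypothetical) that stores only the changes of each commit."""

    def __init__(self):
        self._log = []  # one dict of changed rows per commit

    def commit(self, changes):
        # Record only what changed today; earlier commits are left untouched.
        self._log.append(dict(changes))
        return len(self._log)  # version number after this commit

    def as_of(self, version):
        # Rebuild the table as it looked at `version` by replaying the log.
        state = {}
        for changes in self._log[:version]:
            state.update(changes)
        return state

    def changed_between(self, old_version, new_version):
        # Map each changed key to its (old value, new value) pair.
        old, new = self.as_of(old_version), self.as_of(new_version)
        return {k: (old.get(k), v) for k, v in new.items() if old.get(k) != v}


orders = ChangeLogTable()
v1 = orders.commit({"order-1": 100, "order-2": 250})  # day 1
v2 = orders.commit({"order-2": 300, "order-3": 75})   # day 2

print(orders.as_of(v1))                # {'order-1': 100, 'order-2': 250}
print(orders.changed_between(v1, v2))  # {'order-2': (250, 300), 'order-3': (None, 75)}
```

Because each commit holds only the rows that changed, unchanged rows are never duplicated across versions, which is the storage benefit described above.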

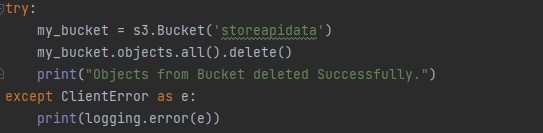

The Results of the Delta Lake Solution

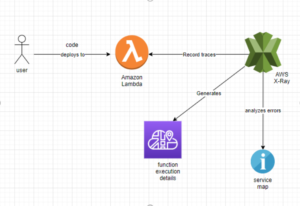

The web hosting company implemented the Delta Lake solution in January 2023 on only a few of its datasets, which delivered a 50% reduction in data processing cost and a 50% reduction in processing time. The company has more than 100 datasets on which VirtueTech Inc. will implement Delta Lake capabilities, which is expected to bring further cost and time reductions for the technology company.

Overall Benefits of Delta Lake:

- Cost reduction on data processing operations by up to 50%.

- Reduction in data processing time of up to 50%.

- Freed-up storage space and reduced maintenance effort through less duplicate data.

- Increase business analyst efficiency.

- Improve data availability by up to 10 times.