GoDaddy, the domain registration and management giant, is migrating the majority of its infrastructure and data warehouse to AWS. GoDaddy chose AWS for its deep experience in delivering a highly reliable global infrastructure, as well as its unmatched track record of technology innovation, to support GoDaddy's rapidly expanding business.

The Challenge

GoDaddy, with its web hosting business and the world’s largest domain name registration business, manages over 57 million domains worldwide. Every day it ingests over 20 terabytes of new, uncompressed data, covering everything from website traffic and usage metrics to server management and ecommerce statistics. All that data is used to configure products and provide client services to its 14.7 million customers, who range from major corporations to small businesses. The data comes from many known and unknown sources, with highly varied formats and disparate meanings and uses, and data from different sources can be inconsistent or contradictory. At small volumes, data can be checked by manual inspection, by custom scripts, or by checks built into ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) pipelines. These methods do not scale to data volumes of this size, which makes maintaining data quality a major challenge for existing data processing techniques.

Why Amazon Web Services

Building a data lake in the cloud eliminates the cost and hassle of managing the infrastructure required in an on-premises data center. That’s why VirtueTech recommended that GoDaddy go with the AWS Cloud: its broad portfolio of services offers options both for building a data lake and for maintaining the quality of the data. That includes Amazon Simple Storage Service (Amazon S3) for storing data in any format, securely, and at massive scale. Deequ, which is used internally at Amazon, can be used to define and verify data quality constraints and to be informed about changes in data distribution.

Running Critical Applications on AWS

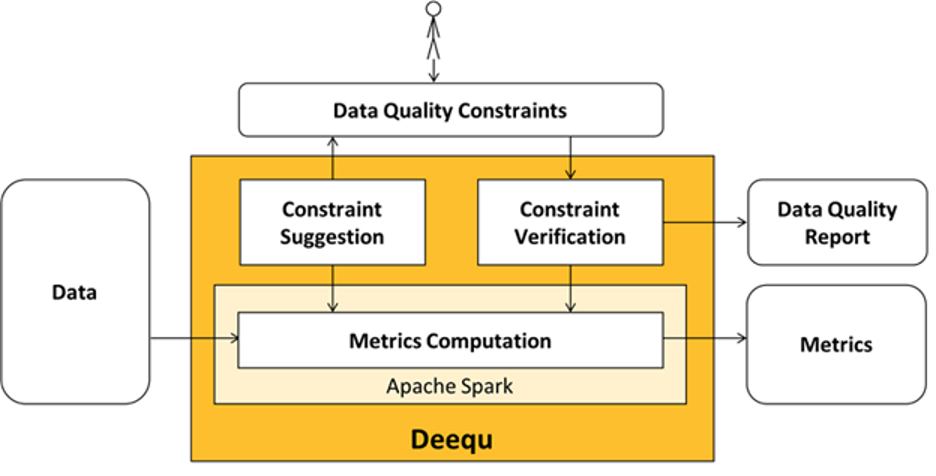

GoDaddy is evaluating data quality using PyDeequ, an open-source Python wrapper over Deequ (an open-source tool developed and used at Amazon), to make sure that the data it ultimately uses is relevant and trustworthy. PyDeequ uses Spark to read from sources such as Amazon Simple Storage Service (Amazon S3) and computes data quality metrics such as completeness, maximum, or correlation through an optimized set of aggregation queries. It also verifies user-defined constraints and generates a data quality report containing the results of the constraint verification. PyDeequ can analyze the whole dataset, or only part of it, and suggest validation constraints based on what it finds. If a data quality check fails, the downstream process is stopped and an email is sent to the producer team using Amazon Simple Email Service (Amazon SES). The email carries the report as an attachment, telling the team what failed and why.
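To make the flow above concrete, here is a minimal sketch of the same idea: compute metrics, verify constraints, produce a report, and stop downstream work on failure. It is written in plain Python and deliberately does not use the PyDeequ or Spark APIs. The sample rows, the column names (`domain_id`, `visits`, `orders`), the thresholds, and the helpers `verify` and `gate` are all hypothetical and are not taken from GoDaddy's pipeline.

```python
from math import sqrt

def completeness(rows, column):
    """Fraction of rows whose value in `column` is not None."""
    if not rows:
        return 0.0
    return sum(r.get(column) is not None for r in rows) / len(rows)

def maximum(rows, column):
    """Largest non-null value in `column`, or None if there is none."""
    values = [r[column] for r in rows if r.get(column) is not None]
    return max(values) if values else None

def correlation(rows, col_a, col_b):
    """Pearson correlation over rows where both columns are non-null."""
    pairs = [(r[col_a], r[col_b]) for r in rows
             if r.get(col_a) is not None and r.get(col_b) is not None]
    n = len(pairs)
    if n < 2:
        return None
    mean_a = sum(a for a, _ in pairs) / n
    mean_b = sum(b for _, b in pairs) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in pairs)
    var_a = sum((a - mean_a) ** 2 for a, _ in pairs)
    var_b = sum((b - mean_b) ** 2 for _, b in pairs)
    if var_a == 0 or var_b == 0:
        return None
    return cov / sqrt(var_a * var_b)

def verify(rows, constraints):
    """Evaluate (name, metric_fn, predicate) triples and return a report."""
    report = []
    for name, metric_fn, predicate in constraints:
        value = metric_fn(rows)
        passed = value is not None and bool(predicate(value))
        report.append({"constraint": name, "value": value, "passed": passed})
    return report

class DataQualityError(Exception):
    """Raised to stop downstream processing when a constraint fails."""

def gate(report):
    """Raise if any constraint failed, so downstream steps do not run."""
    failures = [entry for entry in report if not entry["passed"]]
    if failures:
        raise DataQualityError(failures)

# Hypothetical sample data; one row is missing its `visits` value.
rows = [
    {"domain_id": 1, "visits": 120, "orders": 12},
    {"domain_id": 2, "visits": 300, "orders": 31},
    {"domain_id": 3, "visits": None, "orders": 4},
    {"domain_id": 4, "visits": 80, "orders": 9},
]

# Hypothetical constraints with illustrative thresholds.
constraints = [
    ("domain_id is complete", lambda r: completeness(r, "domain_id"), lambda v: v == 1.0),
    ("visits completeness >= 0.9", lambda r: completeness(r, "visits"), lambda v: v >= 0.9),
    ("max orders <= 1000", lambda r: maximum(r, "orders"), lambda v: v <= 1000),
    ("visits/orders correlation > 0.5", lambda r: correlation(r, "visits", "orders"), lambda v: v > 0.5),
]

report = verify(rows, constraints)
for entry in report:
    print(entry)

try:
    gate(report)
    print("All checks passed; downstream processing may continue.")
except DataQualityError as err:
    # In the pipeline described above, this is the point where the
    # producer team would be notified with the report attached.
    print(f"Stopping downstream processing: {len(err.args[0])} constraint(s) failed.")
```

With this sample data the `visits` completeness constraint fails, so the gate raises and the downstream step is skipped. In a Spark-based setup the metric functions would be replaced by the library's own aggregation queries, and the `except` branch is where a notification step would be wired in.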

GoDaddy PyDeequ System Configuration Diagram

The Benefits

Improved data quality has led to better decision-making across the organization. Users can easily discover high-quality data in optimized formats, and teams are reporting reduced latency for their analytics results. Incomplete or inconsistent data takes significant time to fix before it is usable, which takes time away from other activities and delays acting on the insights the data uncovers. Quality data also helps keep the company’s departments on the same page so that they can work together more effectively. It has also contributed to increased profitability, as business insights are now much more reliable and are delivered more efficiently.